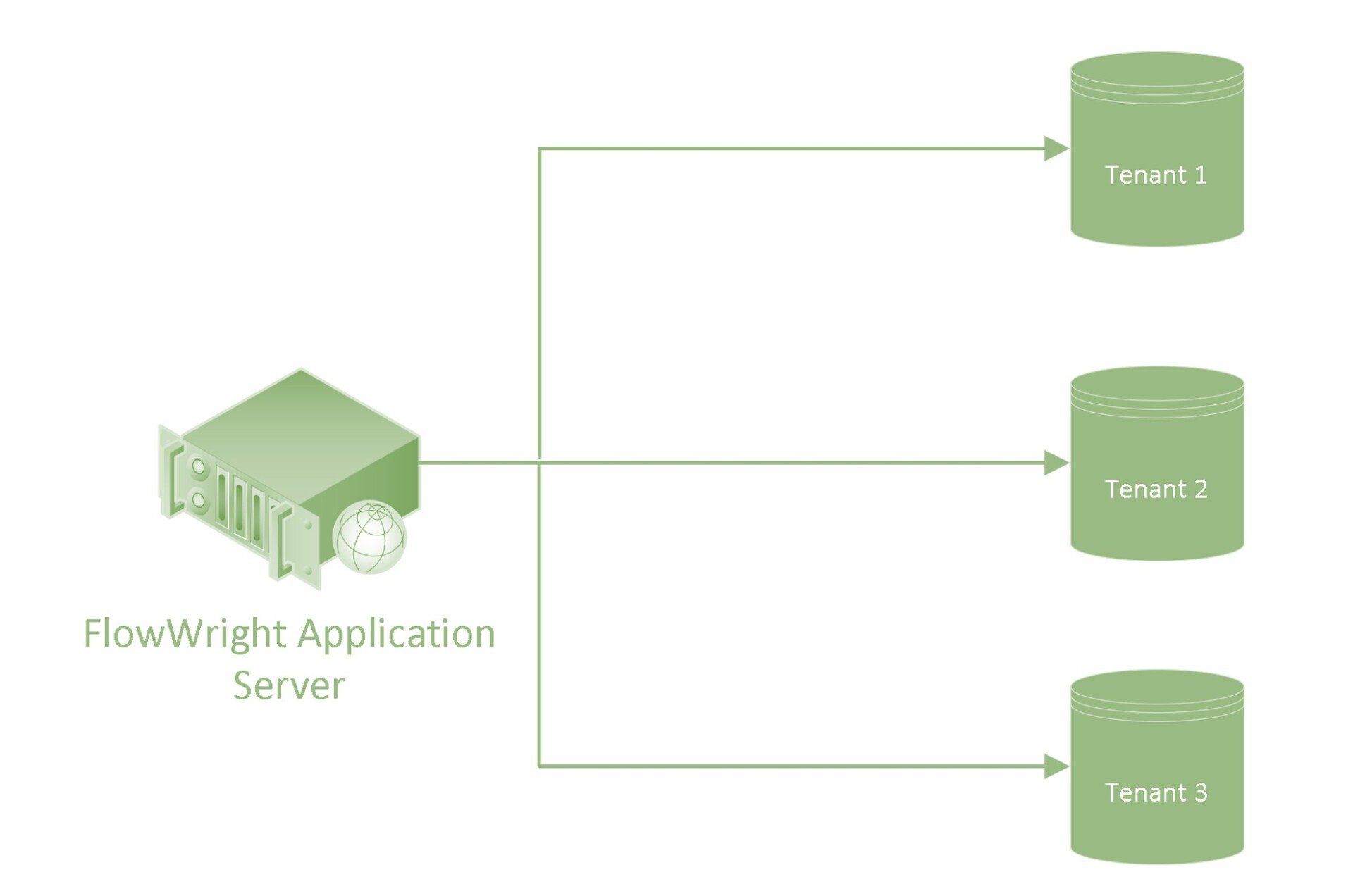

SaaS applications are designed to serve multiple customers (tenants) from a single platform. A multi‑tenant architecture introduces both efficiency and complexity, especially when orchestrating workflows that span thousands of concurrent executions. FlowWright, a high-performance workflow automation platform, tackles this challenge head-on through intelligent engine design, a resilient execution model, and a self-healing optimization system.

Our technical team takes a deep dive into the components that power our scalable, multi‑tenant workflow engine—including configuration refresh, child process isolation, auto‑optimization techniques, and how the system ensures long-term stability under real-world loads. Let's get into it.

Why Multi‑Tenant Workflow Engines Are Hard to Scale

A traditional monolithic workflow engine processes workflows in a single-threaded or tightly coupled manner. When multi‑tenancy enters the picture, several new challenges emerge:

- Tenant Isolation: Data and process separation between tenants.

- Dynamic Configuration: Each tenant may have different rules, forms, decision tables, and APIs.

- Concurrency Handling: Thousands of workflows might be running simultaneously.

- Error Containment: A failure in one tenant’s workflow should never impact another.

- Adaptive Optimization: The engine must detect slowdowns and rebalance resources on the fly.

FlowWright solves these challenges not through brute force, but by designing for scale at the engine level.

1. Dynamic Engine Configuration Refresh

One of our key innovations is the engine's dynamic configuration refresh system. Here's how it differs from the traditional approach.

Traditional Approach:

Legacy workflow systems often require engine restarts to load updated configurations—like new form schemas, REST APIs, or integration credentials. This is disruptive and introduces downtime.

FlowWright's Approach:

FlowWright employs a hot-reload mechanism that periodically refreshes its runtime configuration without stopping the engine. This means:

- The engine reads centralized tenant metadata tables every few seconds.

- Any change in process definitions, API endpoints, or security rules is picked up in near real-time.

- The engine invalidates internal caches and refreshes only affected modules.

- A signature hash system ensures unchanged configurations are not reloaded unnecessarily.

This feature enables zero-downtime configuration updates, which is critical for DevOps teams deploying CI/CD changes across multiple customer environments.
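The signature hash idea can be sketched in a few lines. This is a minimal illustration, not FlowWright's actual implementation: the `ConfigCache` class, the `config_signature` helper, and the choice of SHA-256 over canonical JSON are all assumptions made for the example.

```python
import hashlib
import json


def config_signature(config: dict) -> str:
    """Return a stable hash of a tenant configuration (hypothetical helper)."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ConfigCache:
    """Holds the active config per tenant and reloads only on change."""

    def __init__(self):
        self._signatures = {}  # tenant_id -> last seen signature
        self._active = {}      # tenant_id -> config currently in use

    def refresh(self, tenant_id: str, latest: dict) -> bool:
        """Apply `latest` if its signature changed.

        Returns True when a reload happened, False when it was skipped.
        """
        signature = config_signature(latest)
        if self._signatures.get(tenant_id) == signature:
            return False  # unchanged: skip the reload
        self._signatures[tenant_id] = signature
        self._active[tenant_id] = latest
        return True

    def get(self, tenant_id: str):
        return self._active.get(tenant_id)
```

A polling loop would call `refresh` for each tenant every few seconds; only tenants whose signature changed would pay the cost of a reload.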

2. Child Process Execution Model

To scale and isolate process workloads, we introduced the concept of child process instantiation within the engine.

Use Case Example:

A master workflow initiates 1,000 subprocesses—each responsible for invoice validation per region. If all logic is embedded in the parent, it becomes bloated and unscalable.

How FlowWright Handles This:

- The parent process dynamically spawns child processes with different parameters.

- Each child is executed independently in a parallel thread pool.

- Parent-child linkage is maintained for status tracking but does not introduce runtime dependency.

- Failures in child processes are isolated, logged, and can be retried or escalated without affecting siblings or parents.
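As a rough sketch of this pattern, the snippet below runs one child per region in a thread pool and records failures without stopping siblings. The function names (`validate_invoices`, `run_children`) and the failure condition are hypothetical and only stand in for real child workflows.

```python
from concurrent.futures import ThreadPoolExecutor


def validate_invoices(region: str) -> str:
    """Hypothetical child workflow: validate invoices for one region."""
    if region == "unknown":
        raise ValueError(f"no invoice source for region {region!r}")
    return f"validated {region}"


def run_children(regions, max_workers=4):
    """Run one child per region; return (results, failures) keyed by region."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # The parent keeps a region -> future map for status tracking only.
        futures = {region: pool.submit(validate_invoices, region)
                   for region in regions}
        for region, future in futures.items():
            try:
                results[region] = future.result()
            except Exception as exc:
                # Record the failure; siblings and the parent keep going.
                failures[region] = str(exc)
    return results, failures
```

Entries in `failures` could then be retried or escalated individually, which is the behavior the list above describes.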

Benefits:

- Load Distribution: The engine balances execution across cores and nodes.

- Fault Isolation: Child failures don't bubble up unless explicitly configured.

- Reusability: Common logic like approval routing can be modularized into reusable subprocesses.

This approach allows users of FlowWright to scale out horizontally without overloading the main execution thread, making it ideal for multi‑tenant SaaS platforms where each tenant may trigger hundreds of concurrent operations.

3. Auto‑Optimization at Runtime

An often-overlooked component in workflow execution is runtime self-optimization. Our platform includes several intelligent behaviors that tune engine performance in real-time.

Dynamic Sleep Throttling

Each engine thread evaluates queue depth and system CPU usage. If the system is under heavy load:

- The engine temporarily reduces polling frequency (sleep).

- Threads sleep in a staggered pattern so that they do not all wake at once and contend for the same work.

This prevents the engine from overwhelming the system during peak hours, making it adaptive to load spikes.
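One way to picture load-aware polling is a function that stretches the sleep interval as load rises, plus random jitter so threads drift apart. The thresholds, multipliers, and function names below are illustrative assumptions, not FlowWright settings.

```python
import random


def polling_interval(queue_depth, cpu_percent, base=1.0, max_interval=30.0,
                     cpu_threshold=80.0, depth_threshold=1000):
    """Return the seconds to sleep before the next poll (assumed policy)."""
    interval = base
    if cpu_percent >= cpu_threshold:
        interval *= 4  # CPU is saturated: back off strongly
    if queue_depth >= depth_threshold:
        interval *= 2  # plenty of work already queued: poll less often
    return min(interval, max_interval)


def staggered(interval, jitter=0.25, rng=random):
    """Vary the interval by up to +/- `jitter` so threads wake at different times."""
    return interval * (1 + rng.uniform(-jitter, jitter))
```

Each engine thread would sleep for `staggered(polling_interval(depth, cpu))` seconds between polls, so the interval grows under load and shrinks back to `base` when the system is idle.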

Priority Queueing

Workflow tasks are internally queued and processed using a priority-based scheduler. Tasks can be prioritized based on:

- Tenant type (e.g., enterprise vs. freemium)

- Workflow criticality (e.g., financial vs. marketing)

- SLA commitments

This means FlowWright can guarantee quality of service (QoS) for high-value tenants while still servicing others efficiently.
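A priority-based scheduler of this kind can be approximated with a binary heap. The weights and the `TaskScheduler` class below are assumptions for illustration; lower numbers are served first, and a counter keeps tasks of equal priority in submission order.

```python
import heapq
import itertools

# Hypothetical weights: lower totals are scheduled first.
TENANT_WEIGHT = {"enterprise": 0, "freemium": 2}
CRITICALITY_WEIGHT = {"financial": 0, "marketing": 1}


class TaskScheduler:
    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: first in, first out

    def submit(self, name, tenant_type, criticality):
        priority = TENANT_WEIGHT[tenant_type] + CRITICALITY_WEIGHT[criticality]
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def next_task(self):
        """Pop the highest-priority task name, or None if the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None
```

A strict priority order like this can starve low-priority tasks under sustained load, so a production scheduler would likely add aging or per-tier capacity reservations.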

Hotspot Detection

FlowWright's telemetry module monitors process node execution times. If certain steps or integration points (e.g., API calls) are detected as bottlenecks, the system:

- Flags the node in logs.

- Temporarily increases retry intervals.

- Alerts the admin via dashboard with actionable insights.

Over time, these insights help engineering teams tune custom workflows or replace slow external integrations.
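The detection step might look something like the sketch below, which keeps a rolling window of execution times per node and flags nodes whose average exceeds a threshold. The `HotspotDetector` class, its parameters, and the rolling-mean rule are assumptions; the real telemetry module may use a different statistic.

```python
from collections import defaultdict, deque
from statistics import mean


class HotspotDetector:
    def __init__(self, threshold_seconds=2.0, window=20, min_samples=5):
        self.threshold = threshold_seconds
        self.min_samples = min_samples
        # Keep only the most recent `window` durations per node.
        self._samples = defaultdict(lambda: deque(maxlen=window))

    def record(self, node_id, duration_seconds):
        self._samples[node_id].append(duration_seconds)

    def hotspots(self):
        """Return node ids whose recent average duration exceeds the threshold."""
        return sorted(
            node for node, samples in self._samples.items()
            if len(samples) >= self.min_samples and mean(samples) > self.threshold
        )
```

Requiring a minimum number of samples avoids flagging a node because of a single slow call.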

4. Multi‑Tenant Stability Mechanisms

In SaaS, one noisy tenant can destabilize the whole system—unless proper isolation and throttling are in place. FlowWright provides the following stability enforcements:

Per-Tenant Quotas

- Each tenant has a configurable limit for concurrent workflows, API calls, and background threads.

- If the limit is exceeded, new workflows are delayed (queued) or rejected with proper messaging.

- This prevents any single tenant's overuse from affecting global stability.
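The start, queue, or reject decision can be sketched as a small admission controller. The `TenantQuota` class and its limits are hypothetical, and only the concurrent-workflow limit is modeled here.

```python
from collections import deque


class TenantQuota:
    """Admission control for one tenant (illustrative only)."""

    def __init__(self, max_concurrent, max_queued):
        self.max_concurrent = max_concurrent
        self.max_queued = max_queued
        self.running = set()
        self.queued = deque()

    def submit(self, workflow_id):
        """Return 'started', 'queued', or 'rejected' for a new workflow."""
        if len(self.running) < self.max_concurrent:
            self.running.add(workflow_id)
            return "started"
        if len(self.queued) < self.max_queued:
            self.queued.append(workflow_id)
            return "queued"
        return "rejected"

    def finish(self, workflow_id):
        """Mark a workflow done and promote the oldest queued one, if any."""
        self.running.discard(workflow_id)
        if self.queued and len(self.running) < self.max_concurrent:
            promoted = self.queued.popleft()
            self.running.add(promoted)
            return promoted
        return None
```

With one such object per tenant, a burst from one tenant fills only that tenant's queue and leaves other tenants' capacity untouched.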

Circuit Breakers for External APIs

FlowWright integrates with numerous external systems (DocuSign, SAP, Dynamics, etc.). If one of them goes down:

- A circuit breaker trips after N failures.

- Calls are paused and queued.

- Calls are retried after exponentially increasing backoff periods.

This prevents the engine from burning threads on unresponsive dependencies.
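A minimal circuit breaker with exponential backoff could be sketched as follows. The class, its defaults, and the doubling rule are assumptions for illustration. Time is passed in explicitly so the logic is easy to test; a caller would typically pass `time.monotonic()`.

```python
class CircuitBreaker:
    def __init__(self, failure_threshold=3, base_delay=1.0, max_delay=60.0):
        self.failure_threshold = failure_threshold  # the "N" failures
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.failures = 0      # consecutive failures
        self.trips = 0         # times the breaker opened since the last success
        self.open_until = 0.0  # calls are blocked before this timestamp

    def allow(self, now):
        """True if a call may be attempted at time `now`."""
        return now >= self.open_until

    def record_success(self):
        self.failures = 0
        self.trips = 0
        self.open_until = 0.0

    def record_failure(self, now):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            # Double the pause on every consecutive trip, up to max_delay.
            delay = min(self.base_delay * 2 ** self.trips, self.max_delay)
            self.open_until = now + delay
            self.trips += 1
```

While `allow` returns False, the engine would queue the call instead of spending a thread on it; the first successful call resets the breaker.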

Tenant-Specific Logging

Logs are segmented per tenant for easier diagnosis. Using tenant keys, developers can drill down into specific execution histories without noise from other tenants.
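Tenant-keyed logging can be illustrated with Python's standard `logging` module: an adapter stamps every record with a tenant key, and a filter lets a handler keep only one tenant's records. The logger name, tenant keys, and `TenantFilter` class are made up for the example and do not describe FlowWright's log storage.

```python
import io
import logging


def tenant_logger(base, tenant_key):
    """Wrap `base` so every record carries the tenant key."""
    return logging.LoggerAdapter(base, {"tenant": tenant_key})


class TenantFilter(logging.Filter):
    """Pass only records that belong to one tenant."""

    def __init__(self, tenant_key):
        super().__init__()
        self.tenant_key = tenant_key

    def filter(self, record):
        return getattr(record, "tenant", None) == self.tenant_key


# Route only tenant "acme" to this handler (an in-memory stream for the demo).
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("[%(tenant)s] %(message)s"))
handler.addFilter(TenantFilter("acme"))

engine_log = logging.getLogger("engine")
engine_log.setLevel(logging.INFO)
engine_log.propagate = False
engine_log.addHandler(handler)

tenant_logger(engine_log, "acme").info("workflow 42 started")
tenant_logger(engine_log, "globex").info("workflow 7 started")
```

Only the `acme` record reaches the stream, which mirrors drilling into one tenant's history without noise from the others.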

5. Deployment and Containerization

To support microservice architectures, FlowWright’s engine can run in containerized environments with full orchestration support via:

- Docker: Engines run in lightweight, isolated containers.

- Kubernetes: Workflows scale automatically across pods based on queue depth and system load.

- Cluster Health: Built-in engine heartbeat checks notify Kubernetes if a pod becomes unresponsive, triggering a replacement.

This makes it possible to scale our platform to thousands of tenants and millions of executions with minimal infrastructure overhead.
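To make queue-based scaling concrete, the sketch below computes a replica count from queue depth in the same spirit as the Kubernetes Horizontal Pod Autoscaler, which rounds the ratio of the observed metric to its target up to a whole number of pods. The function name, the per-pod target, and the replica bounds are assumptions, not values from FlowWright.

```python
import math


def desired_replicas(queue_depth, target_per_pod, min_replicas=1, max_replicas=20):
    """Return the pod count needed to keep roughly `target_per_pod` items per pod."""
    wanted = math.ceil(queue_depth / target_per_pod)
    # Clamp to the configured bounds so the cluster never scales to zero
    # or beyond its budget.
    return max(min_replicas, min(wanted, max_replicas))
```

For example, a queue of 950 items with a target of 100 items per pod yields 10 replicas.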

6. Fail-Safe Design Philosophy

Our enterprise workflow automation software is engineered with a fail-safe-first mindset. Even under adverse conditions—such as a corrupted workflow, a failed DB cluster, or a misconfigured tenant—FlowWright ensures:

- No infinite loops: Node timeouts and max retry limits.

- No data corruption: All state transitions are transactional.

- No silent failures: All exceptions are captured in trace logs and optionally routed to external monitoring systems like Datadog or BetterStack.

The result is predictable failure behavior—an essential characteristic of enterprise-grade workflow platforms.
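The retry-limit and no-silent-failure rules can be sketched together. `run_node`, `NodeFailed`, and the list-based trace are hypothetical names for illustration; node timeouts and transactional state are left out of this example.

```python
class NodeFailed(Exception):
    """Raised when a node exhausts its retry budget."""


def run_node(action, max_retries=3, trace=None):
    """Run `action`, retrying up to `max_retries` times and tracing every error."""
    trace = trace if trace is not None else []
    for attempt in range(1, max_retries + 1):
        try:
            return action()
        except Exception as exc:
            # Every exception is recorded; nothing is swallowed silently.
            trace.append(f"attempt {attempt}: {exc!r}")
    # The loop is bounded, so a failing node cannot retry forever.
    raise NodeFailed(f"gave up after {max_retries} attempts")
```

The caller either gets a result or a `NodeFailed` error with a full trace, which is one way to obtain the predictable failure behavior described above.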

Customer Examples

A global manufacturing company uses our platform to automate 700+ workflows across 12 divisions, with 3,500+ users executing more than 2 million workflows annually. Their configuration includes:

- 20+ child workflows triggered per purchase order.

- SLA-based prioritization for critical procurement processes.

- Per-tenant rate limiting to isolate departments by usage profile.

- Auto-scaling Kubernetes deployment with auto-healing enabled.

Scaling a multi‑tenant workflow engine is not simply about adding more servers—it’s about smart architecture, autonomous optimization, and failure resilience. FlowWright brings all these capabilities together in a purpose-built platform designed to support high-throughput, secure, and dynamic process automation.